Orchestration Postdoc Blog (OPDB) #3

Project Blog | Postdoc | Jason Noble | March 1, 2019

Between Speech and Music: Composing for the Guitar with Dialectal Patterns

Before my PhD, I played guitar a little bit: enough to accompany myself at open mics, but not enough to compose for it seriously. I never really felt that I understood the instrument: I found it hard to visualize what is possible in terms of fingering, and I didn’t have a clear mental image of the vast range of timbres of which it is capable.

Then one day, rising-star guitarist and fellow Newfoundlander Steve Cowan wrote to ask if I would compose a piece for him. I had heard of Steve, but we didn’t know each other. I quickly agreed, eager to learn more about the guitar. Neither of us could have envisioned the extent of the collaboration on which we were about to embark. Steve and I share an interest in the musicality of speech: the subtle melodic inflections, amazingly intricate rhythmic patterns, and almost endless variety of timbres that we take for granted every time we chat with our neighbours. This seemed like a promising starting point for our collaboration.

Our province of Newfoundland and Labrador is home to perhaps the greatest regional diversity of spoken English in North America (https://dialectatlas.mun.ca/), but like dialects all over the world, that diversity is diminishing over time as mass media and easier travel reduce the isolation of rural communities. We decided to tour the island of Newfoundland and record interviews with residents, while our province’s treasury of distinct speech patterns is still a living reality. We would then use those recordings as source material for musical creation. Our interviewees gave us 18 hours of incredibly rich material to work with, showcasing the contrasts between dialect families of Irish, British, Scottish, and French ancestral heritage.

We delivered a public lecture recital detailing our process in McGill’s Research Alive series (2017; https://www.youtube.com/watch?v=wAJ0UX3t4Bs). As we discuss in the video, there are many ways to create sonic hybrids between speech and music. One of the most intuitive is melodic transcription, which Steve Reich uses in his masterpiece Different Trains (1988; https://www.youtube.com/watch?v=pZRBfRXJyak). New technologies allow us to more precisely analyze speech sounds and recreate them with musical instruments, as Peter Ablinger does with his amazing “talking piano” in pieces like Deus Cantando (2009; https://www.youtube.com/watch?v=BzcBusxDThM). Digital manipulations open up whole new worlds of synthetic speech-derived sounds, as Trevor Wishart brilliantly demonstrates in pieces like Encounters in the Republic of Heaven (2010; http://www.trevorwishart.co.uk/encounters.html). We adopted methods similar to these, applying them to the dialectal patterns and narrative content of our recorded interviews.
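For readers curious what melodic transcription looks like in its most mechanical form, here is a minimal sketch that snaps a speech pitch contour to the nearest equal-tempered notes. It is an illustration only: the function names and the contour values are hypothetical, nothing here is taken from the pieces mentioned above, and transcription by ear involves many musical judgments that this ignores. The only fixed ingredient is the standard conversion from frequency to MIDI note number, with A4 = 440 Hz = MIDI 69.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_midi(frequency_hz):
    """Nearest MIDI note number for a frequency, taking A4 = 440 Hz = MIDI 69."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(69 + 12 * math.log2(frequency_hz / 440.0))


def midi_to_name(midi_note):
    """Note name with octave number, using the convention that MIDI 60 is C4."""
    return NOTE_NAMES[midi_note % 12] + str(midi_note // 12 - 1)


def transcribe(contour_hz):
    """Map a list of pitch estimates (Hz) to note names, merging immediate repeats."""
    notes = []
    for frequency in contour_hz:
        name = midi_to_name(hz_to_midi(frequency))
        if not notes or notes[-1] != name:
            notes.append(name)
    return notes


# Hypothetical pitch estimates for a short spoken phrase (made-up values).
example_contour = [196.0, 198.0, 220.0, 247.0, 220.0]
print(transcribe(example_contour))
```

Rounding to the nearest semitone throws away exactly the microtonal inflections that make speech sound like speech, which is one reason a transcription like this is a starting point rather than a finished melody.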

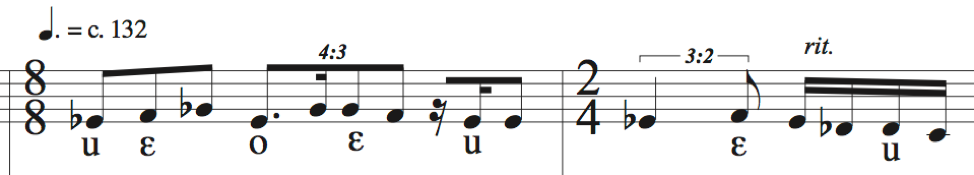

Since Research Alive, we have begun to explore ways of emulating speech in music that are specific to the guitar. In particular, we discovered ACTOR partner Caroline Traube’s research on how different pluck positions on guitar strings produce different timbres that are perceptually similar to vowels in speech (https://www.researchgate.net/publication/4087847_Timbral_analogies_between_vowels_and_plucked_string_tones). Our new piece, we never told nobody (2019), asks the guitarist to change pluck positions rapidly, following IPA vowel symbols printed below the staff. A guide in the performance notes helps the performer learn which pluck positions correspond to which vowels.
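To give a rough sense of why pluck position changes timbre at all, here is a small sketch based on the textbook model of an ideal string plucked at a single point. This is not Traube’s method and not the mapping used in the piece: it is the standard idealization, in which the nth harmonic has amplitude proportional to sin(nπp)/n², where p is the pluck point as a fraction of the string length. The function name and parameters are hypothetical, and a real guitar string (with stiffness, damping, and a finger or nail of finite width) will behave less neatly.

```python
import math


def harmonic_amplitudes(pluck_fraction, n_harmonics=12, height=1.0):
    """Harmonic amplitudes of an ideal string plucked at one point.

    pluck_fraction: pluck point as a fraction of string length (0 < p < 1),
                    measured from either end.
    height:         initial displacement at the pluck point (arbitrary units).
    """
    p = pluck_fraction
    if not 0.0 < p < 1.0:
        raise ValueError("pluck_fraction must be strictly between 0 and 1")
    # Fourier coefficients of a triangular initial shape with its peak at p.
    scale = 2.0 * height / (math.pi ** 2 * p * (1.0 - p))
    return [
        scale * math.sin(n * math.pi * p) / n ** 2
        for n in range(1, n_harmonics + 1)
    ]


# Compare a pluck at the middle of the string with one near the bridge.
for p in (0.5, 0.1):
    amps = harmonic_amplitudes(p, n_harmonics=8)
    print(p, [round(abs(a), 3) for a in amps])
```

In this model, plucking at the midpoint removes every even harmonic, while plucking closer to the bridge leaves relatively more energy in the upper harmonics. Moving the pluck point therefore shifts the peaks and notches in the spectrum, which is the kind of spectral shaping that invites comparison with vowels.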

This piece will be premiered in Steve’s doctoral recital on March 11th at McGill University (https://www.facebook.com/events/720904368305824/), along with performances of two other pieces I’ve composed for him in which we explore the crossover soundworld between speech and music.

by Jason Noble